My Home Server is struggling with high IOWait under IO load and the time has come to upgrade the HP SmartArray P410 SAS/SATA controller with Write Cache.

In Setting up a Home Server using an old (2009) HP Proliant ML150 G6 I describe how my storage pool is using a mixed set of drives. The drives are connected to two different

Hardware installation

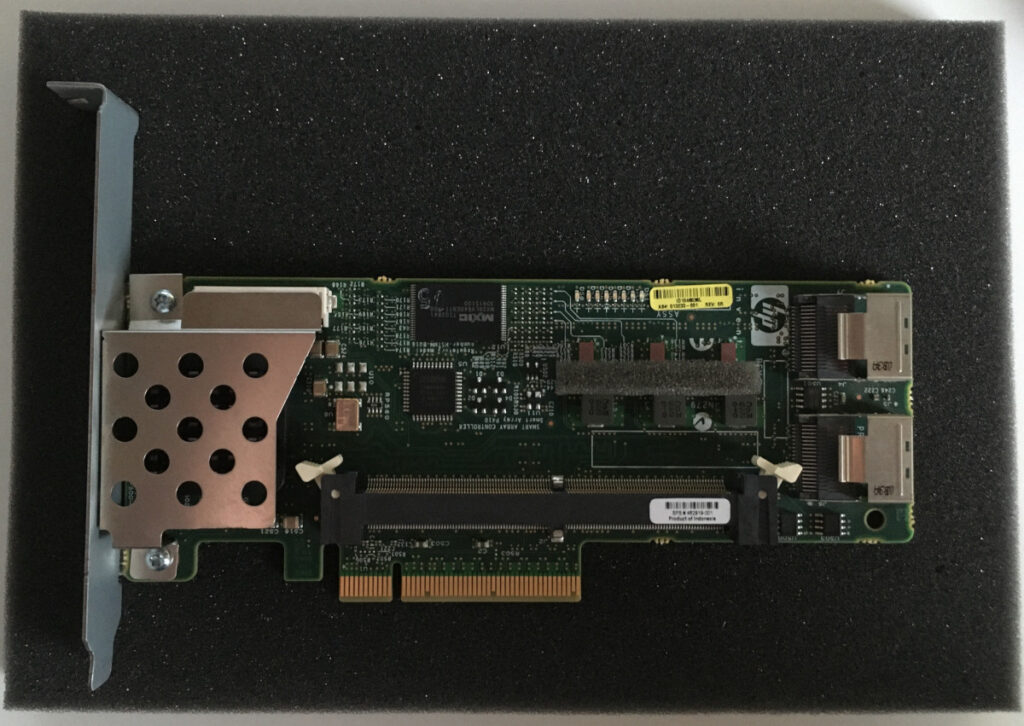

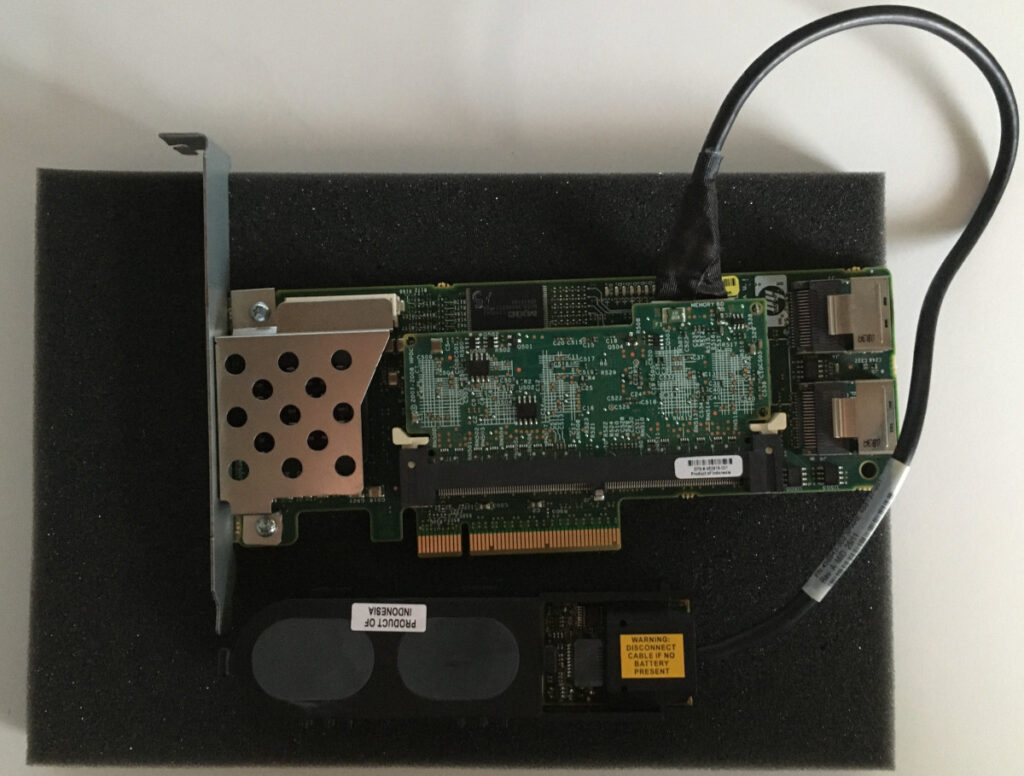

The HP Proliant ML150 G6 server that I am using for our Home Server has 2 storage controllers. It has an onboard SATA controller with 6 SATA ports. In addition to that it has an HP SmartArray P410 8 port SAS/SATA controller.

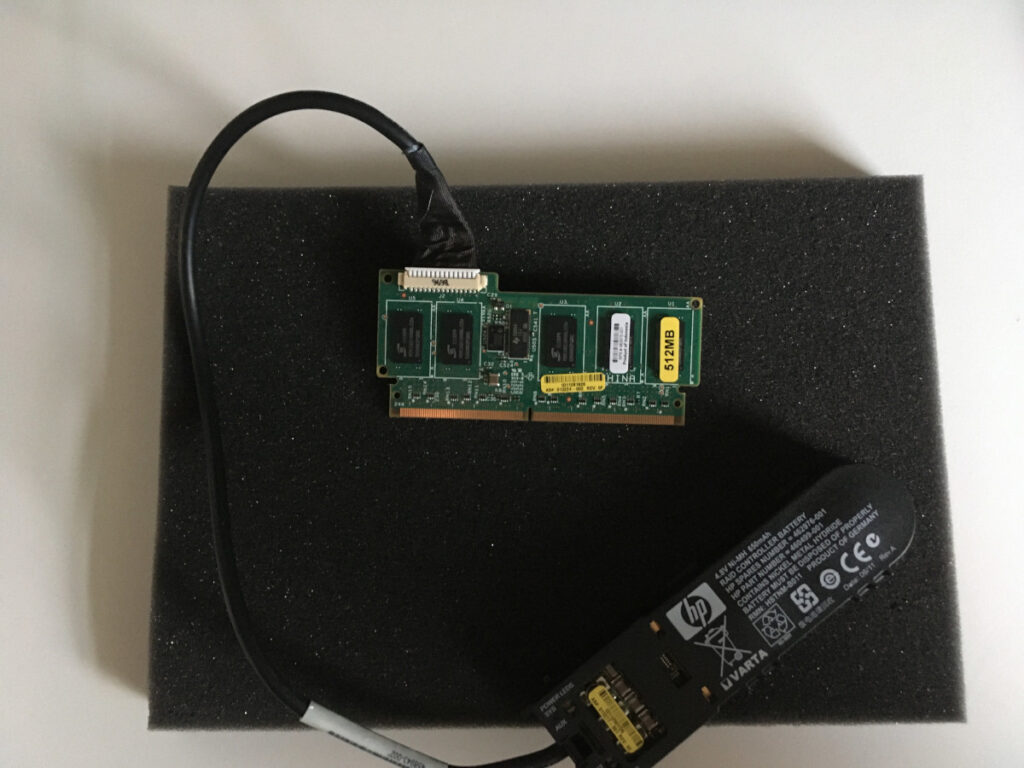

I ordered a 512MB Battery Backed Write Cache (HP 462975-001) with Battery pack. This seems to be New Old Stock and I am wondering if the battery cells are still ok. Only time will tell.

This module fits into the P410 that is currently empty. The two Mini-SAS connectors carry each 4 lanes of either SAS or SATA. In my case a single cable connects to the 4 bay SAS/SATA 3.5″ Hot-Plug drive cage.

I also purchased a Mini-SAS to 4x SATA 1m cable. In hindsight this cable is too long and I will get another – shorter – cable in the future in order to ease cable management.

The Battery Backup has no obvious place to sit. But if you look closer at the standard Front Fan blue shroud there is actually a place where you can click it in.

Drive migration

Now it is time to move the ZFS RAIDZ drives that is on the onboard SATA controller to the HP P410 controller.

Theoritically this could be a simple task – shut down the server and move the drives. Put the two Seagate Constellation ES drives back into the Hotplug Drive Cage and the Hitachi drive to the Mini-SAS to 4x SATA cable and switch the system back on. Then export each of the 3 drives as individual logical drives and thats it!

But as far as I can understand there is more to this move. If I am mistaken, you are very welcome to comment below.

So the plan is to remove one drive at a time. That will keep the RAIDZ array operational though degraded. I will then expose that drive as an individual logical drive in the HP Raid controller software. And finally replace the drive in the ZFS pool. Ideally ZFS will recognise that the drive is already a part of the pool. Then do a quick test or resilver and continue on its merry way.

… is not that easy …

Unfortunate it turned out not to be that simple. I have now migrated both the Seagate drives back into the drive cage. With both of them the story was the same as I am expecting the Hitachi drive migration will be. Thus I am only describing that migration:

First lets check the status of the ZFS pool:

<code>jan@silo:~$ sudo zpool status

pool: tank

state: ONLINE

scan: resilvered 336G in 0 days 03:51:03 with 0 errors on Mon Oct 19 03:46:52 2020

config:

NAME STATE READ WRITE CKSUM

tank ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

scsi-3600508b1001c1aab8e0bf0108295560f ONLINE 0 0 0

scsi-3600508b1001ce518f34fad016f8e5c7e ONLINE 0 0 0

ata-Hitachi_HTS545050A7E380_TEB51A3RHDXPYP ONLINE 0 0 0

scsi-3600508b1001c25eecce5f52f297aa1a3 ONLINE 0 0 0

scsi-3600508b1001cc878a5c743f335a094cd ONLINE 0 0 0

errors: No known data errors</code>The 4 scsi-**** drives are the ones already connected to the HP SmartArrap P410 controller. The ata-Hitachi drive is the last one we need to migrate.

After having powered down the server, moved the drive from the onboard controller to the Mini-SAS to 4x SATA cable this is what ZFS reports:

jan@silo:~$ sudo zpool status

pool: tank

state: DEGRADED

status: One or more devices could not be used because the label is missing or

invalid. Sufficient replicas exist for the pool to continue

functioning in a degraded state.

action: Replace the device using 'zpool replace'.

see: http://zfsonlinux.org/msg/ZFS-8000-4J

scan: resilvered 336G in 0 days 03:51:03 with 0 errors on Mon Oct 19 03:46:52 2020

config:

NAME STATE READ WRITE CKSUM

tank DEGRADED 0 0 0

raidz1-0 DEGRADED 0 0 0

scsi-3600508b1001c1aab8e0bf0108295560f ONLINE 0 0 0

scsi-3600508b1001ce518f34fad016f8e5c7e ONLINE 0 0 0

4485826815560792957 UNAVAIL 0 0 0 was /dev/disk/by-id/ata-Hitachi_HTS545050A7E380_TEB51A3RHDXPYP-part1

scsi-3600508b1001c25eecce5f52f297aa1a3 ONLINE 0 0 0

scsi-3600508b1001cc878a5c743f335a094cd ONLINE 0 0 0

errors: No known data errorsThen the HP Storage ssacli command shows:

jan@silo:~$ sudo ssacli ctrl slot=4 show config

Smart Array P410 in Slot 4 (sn: PACCRID1046036L)

Internal Drive Cage at Port 1I, Box 1, OK

Port Name: 1I

Port Name: 2I

Array A (SATA, Unused Space: 0 MB)

logicaldrive 1 (465.73 GB, RAID 0, OK)

physicaldrive 1I:1:3 (port 1I:box 1:bay 3, SATA HDD, 500 GB, OK)

Array B (SATA, Unused Space: 0 MB)

logicaldrive 2 (465.73 GB, RAID 0, OK)

physicaldrive 1I:1:4 (port 1I:box 1:bay 4, SATA HDD, 500 GB, OK)

Array C (SATA, Unused Space: 0 MB)

logicaldrive 3 (465.73 GB, RAID 0, OK)

physicaldrive 1I:1:2 (port 1I:box 1:bay 2, SATA HDD, 500 GB, OK)

Array D (SATA, Unused Space: 0 MB)

logicaldrive 4 (465.73 GB, RAID 0, OK)

physicaldrive 1I:1:1 (port 1I:box 1:bay 1, SATA HDD, 500 GB, OK)

Unassigned

physicaldrive 1I:1:32 (port 1I:box 1:bay 32, SATA HDD, 500 GB, OK)

SEP (Vendor ID PMCSIERA, Model SRC 8x6G) 250 (WWID: 5001438010C5017F)Next, let us export the unassigned physical drive as a logical drive:

jan@silo:~$ sudo ssacli ctrl slot=4 create type=logicaldrive drives=1I:1:32

jan@silo:~$ sudo ssacli ctrl slot=4 show config

Smart Array P410 in Slot 4 (sn: PACCRID1046036L)

Internal Drive Cage at Port 1I, Box 1, OK

Port Name: 1I

Port Name: 2I

Array A (SATA, Unused Space: 0 MB)

logicaldrive 1 (465.73 GB, RAID 0, OK)

physicaldrive 1I:1:3 (port 1I:box 1:bay 3, SATA HDD, 500 GB, OK)

Array B (SATA, Unused Space: 0 MB)

logicaldrive 2 (465.73 GB, RAID 0, OK)

physicaldrive 1I:1:4 (port 1I:box 1:bay 4, SATA HDD, 500 GB, OK)

Array C (SATA, Unused Space: 0 MB)

logicaldrive 3 (465.73 GB, RAID 0, OK)

physicaldrive 1I:1:2 (port 1I:box 1:bay 2, SATA HDD, 500 GB, OK)

Array D (SATA, Unused Space: 0 MB)

logicaldrive 4 (465.73 GB, RAID 0, OK)

physicaldrive 1I:1:1 (port 1I:box 1:bay 1, SATA HDD, 500 GB, OK)

Array E (SATA, Unused Space: 0 MB)

logicaldrive 5 (465.73 GB, RAID 0, OK)

physicaldrive 1I:1:32 (port 1I:box 1:bay 32, SATA HDD, 500 GB, OK)

SEP (Vendor ID PMCSIERA, Model SRC 8x6G) 250 (WWID: 5001438010C5017F)

Now on to replace the ‘failed’ drive with the ‘new’ drive – physically they are the same. First we need to find the name of the new drive:

jan@silo:~$ ls /dev/disk/by-id/scsi*

/dev/disk/by-id/scsi-3600508b1001c1aab8e0bf0108295560f

/dev/disk/by-id/scsi-3600508b1001c1aab8e0bf0108295560f-part1

/dev/disk/by-id/scsi-3600508b1001c1aab8e0bf0108295560f-part9

/dev/disk/by-id/scsi-3600508b1001c25eecce5f52f297aa1a3

/dev/disk/by-id/scsi-3600508b1001c25eecce5f52f297aa1a3-part1

/dev/disk/by-id/scsi-3600508b1001c25eecce5f52f297aa1a3-part9

/dev/disk/by-id/scsi-3600508b1001cc878a5c743f335a094cd

/dev/disk/by-id/scsi-3600508b1001cc878a5c743f335a094cd-part1

/dev/disk/by-id/scsi-3600508b1001cc878a5c743f335a094cd-part9

/dev/disk/by-id/scsi-3600508b1001ce518f34fad016f8e5c7e

/dev/disk/by-id/scsi-3600508b1001ce518f34fad016f8e5c7e-part1

/dev/disk/by-id/scsi-3600508b1001ce518f34fad016f8e5c7e-part9

/dev/disk/by-id/scsi-3600508b1001cf2bda20b3d8c1555dca2

As you can see scsi-3600508b1001cf2bda20b3d8c1555dca2 is the only one not in currently in the array. So let us replace the missing drive with this drive:

jan@silo:~$ sudo zpool replace tank 4485826815560792957 scsi-3600508b1001cf2bda20b3d8c1555dca2

invalid vdev specification

use '-f' to override the following errors:

/dev/disk/by-id/scsi-3600508b1001cf2bda20b3d8c1555dca2-part1 is part of active pool 'tank'Alas – it was not to be as easy. And using -f did not solve it either. So it was Google to the rescue. I found this post that was similar and had a an answer: https://alchemycs.com/2019/05/how-to-force-zfs-to-replace-a-failed-drive-in-place/ . The trick is to wipe the drive – or at least some of it so that ZFS does not recognise it. So I did:

jan@silo:~$ sudo dd if=/dev/zero of=/dev/disk/by-id/scsi-3600508b1001cf2bda20b3d8c1555dca2 bs=4M count=10000

10000+0 records in

10000+0 records out

41943040000 bytes (42 GB, 39 GiB) copied, 474,449 s, 88,4 MB/s

jan@silo:~$ sudo zpool replace tank 4485826815560792957 scsi-3600508b1001cf2bda20b3d8c1555dca2

invalid vdev specification

use '-f' to override the following errors:

/dev/disk/by-id/scsi-3600508b1001cf2bda20b3d8c1555dca2 contains a corrupt primary EFI label.Maybe 40 GB was a bit over the top but it got the job done. But still ZFS is complaining? Let us try to force it:

jan@silo:~$ sudo zpool replace tank 4485826815560792957 scsi-3600508b1001cf2bda20b3d8c1555dca2 -fAt least ZFS did not complain this time. Let us then check the state of the pool, has it accepted the replacement drive?

jan@silo:~$ sudo zpool status

pool: tank

state: DEGRADED

status: One or more devices is currently being resilvered. The pool will

continue to function, possibly in a degraded state.

action: Wait for the resilver to complete.

scan: resilver in progress since Thu Oct 22 08:09:03 2020

511G scanned at 1,06G/s, 76,0G issued at 162M/s, 1,63T total

15,1G resilvered, 4,55% done, 0 days 02:47:47 to go

config:

NAME STATE READ WRITE CKSUM

tank DEGRADED 0 0 0

raidz1-0 DEGRADED 0 0 0

scsi-3600508b1001c1aab8e0bf0108295560f ONLINE 0 0 0

scsi-3600508b1001ce518f34fad016f8e5c7e ONLINE 0 0 0

replacing-2 DEGRADED 0 0 0

4485826815560792957 UNAVAIL 0 0 0 was /dev/disk/by-id/ata-Hitachi_HTS545050A7E380_TEB51A3RHDXPYP-part1

scsi-3600508b1001cf2bda20b3d8c1555dca2 ONLINE 0 0 0 (resilvering)

scsi-3600508b1001c25eecce5f52f297aa1a3 ONLINE 0 0 0

scsi-3600508b1001cc878a5c743f335a094cd ONLINE 0 0 0

errors: No known data errorsIt looks like ZFS accepted the drive and is rebuilding the drive. And the storage has been operational all during this time.

Final thoughts

The upgrade of the HP SmartArray P410 took more effort than I thought it would. I was initially hoping to just install the Battery Backed Write Cache module and move the drives over. But I learned a few things so I would call it successful although it has taken longer than anticipated.

I would like to delve deeper into the ssacli command so perhaps I will cover this in a future post…